{ Explore | Examine | Expose | Explain } your model with the explabox!

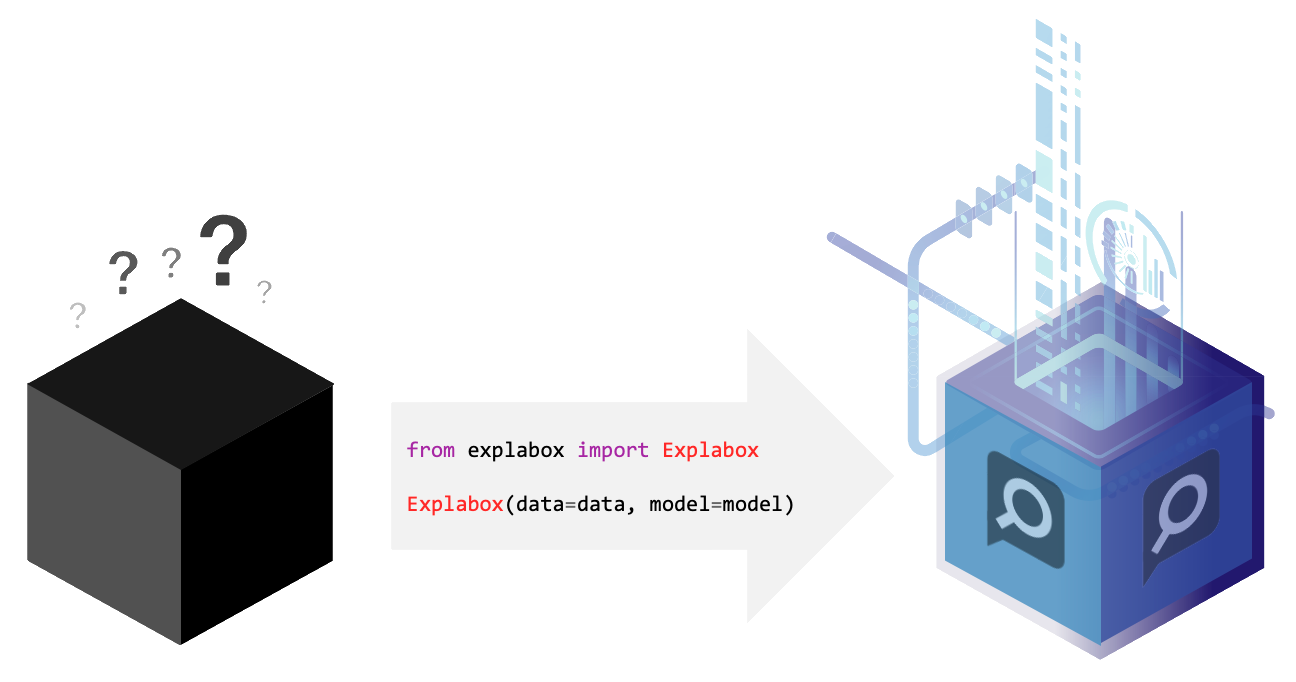

The explabox aims to support data scientists and machine learning (ML) engineers in explaining, testing and documenting AI/ML models, developed in-house or acquired externally. The explabox turns your ingestibles (AI/ML model and/or dataset) into digestibles (statistics, explanations or sensitivity insights)!

The explabox can be used to:

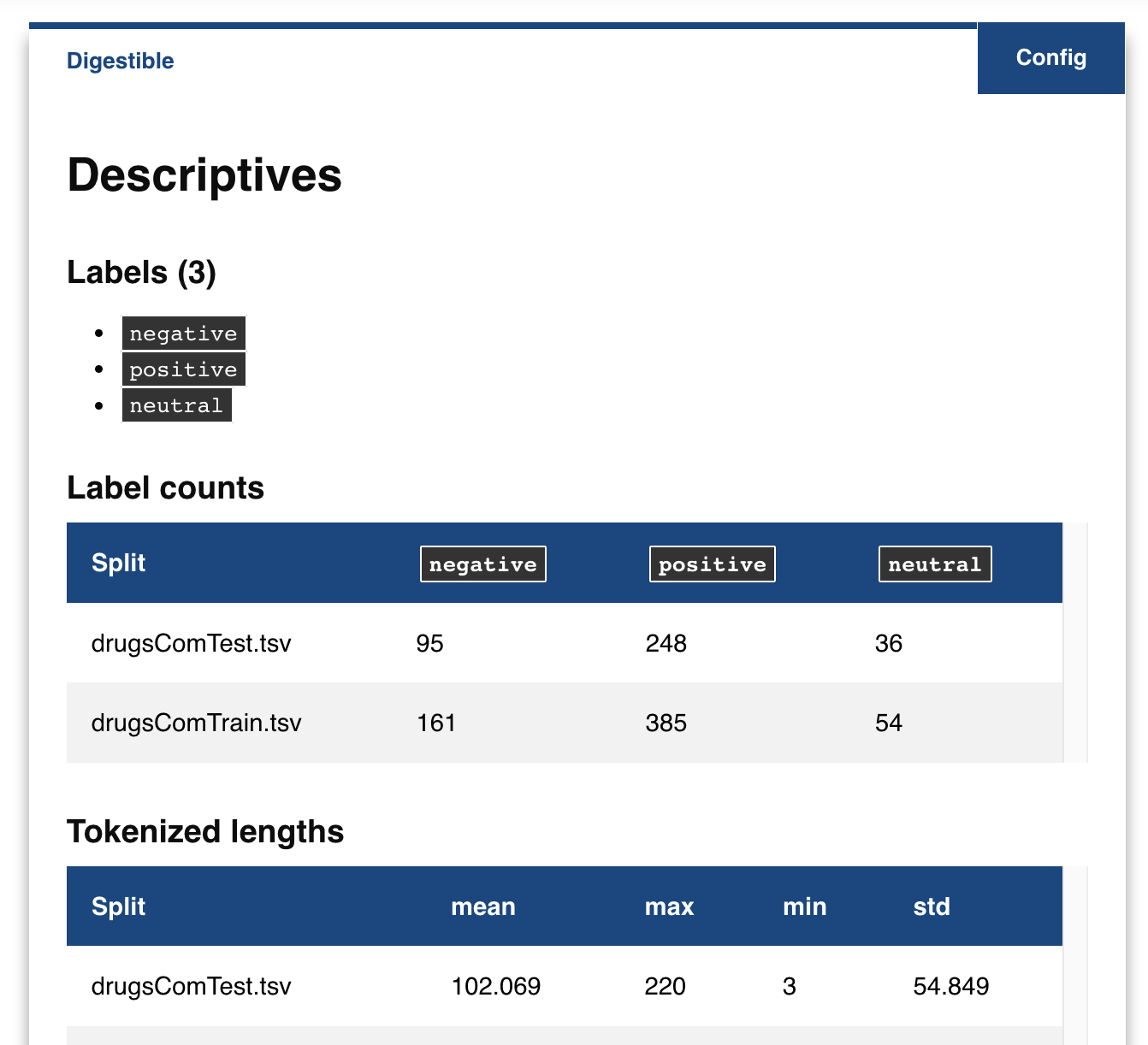

Explore: describe aspects of the model and data.

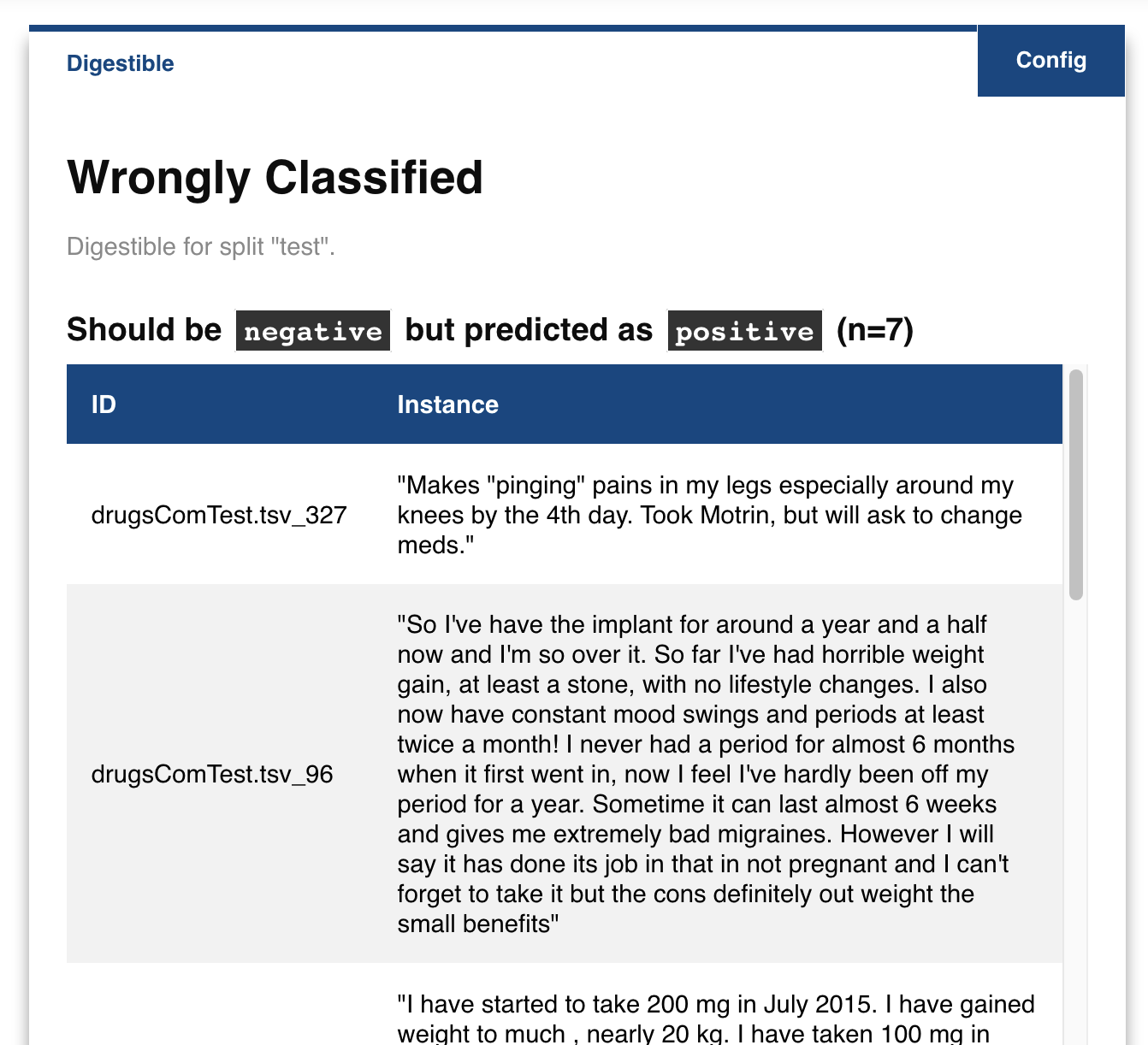

Examine: calculate quantitative metrics on how the model performs.

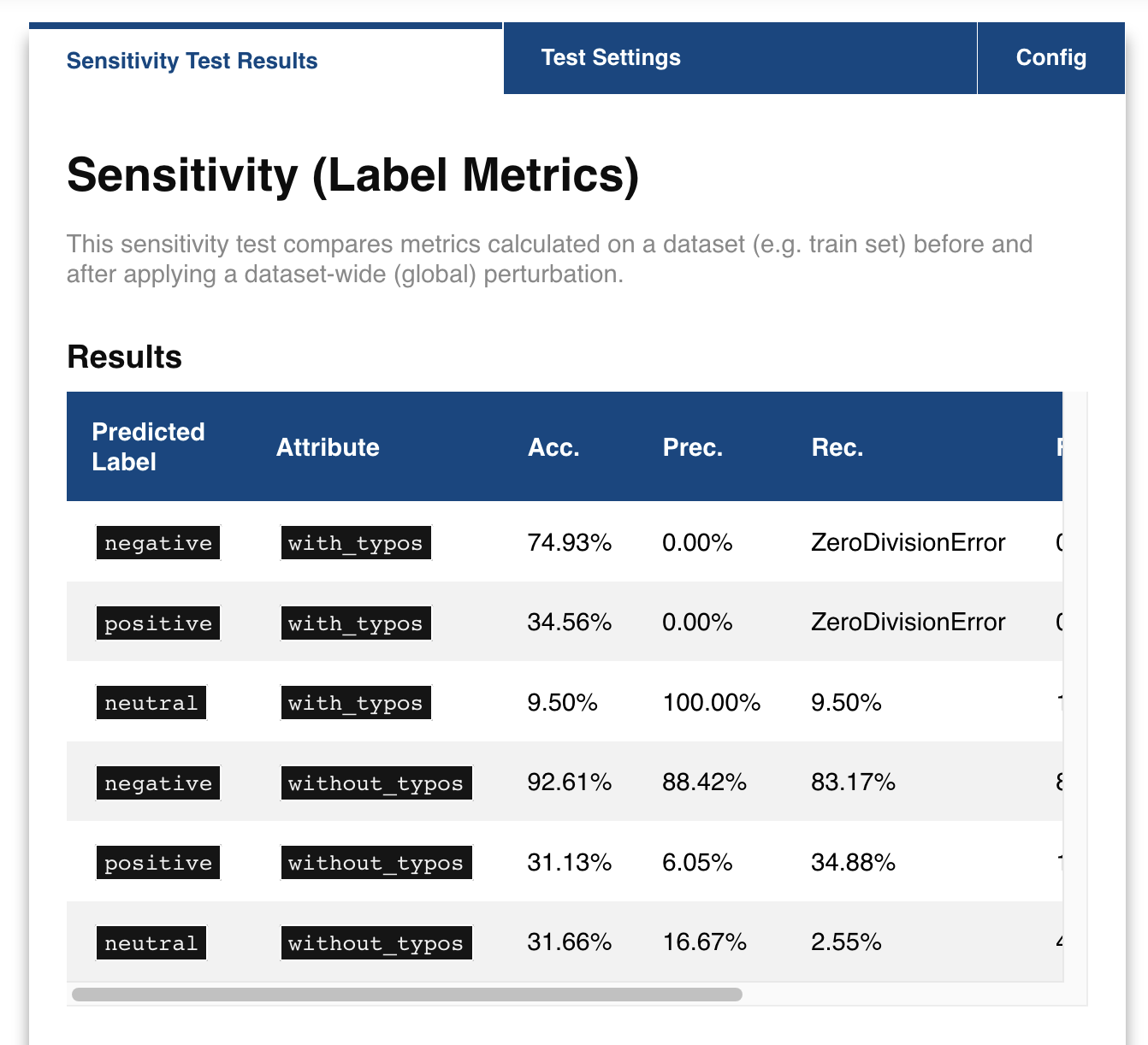

Expose: assess model sensitivity to random inputs (safety), test model generalizability (e.g. sensitivity to typos; robustness), and measure the effect of adjusting attributes in the inputs (e.g. swapping male pronouns for female pronouns; fairness), both for the dataset as a whole (global) and for individual instances (local).

Explain: use explainable AI (XAI) methods to explain the whole dataset (global), model behavior on the dataset (global), and specific predictions/decisions (local).
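As a rough illustration of what the Examine and Expose steps measure, the following self-contained sketch scores a toy classifier on a handful of labelled sentences and then re-scores it after injecting typos. It does not use the explabox API: `toy_model`, `swap_typo`, `accuracy`, the keyword lists and the example sentences are all hypothetical stand-ins, and the typo rule is a simple deterministic swap rather than the perturbation the explabox itself applies.

```python
from typing import Callable, List, Tuple

# Hypothetical keyword lists for a toy sentiment classifier (not part of explabox).
POSITIVE = {"great", "love", "helped"}
NEGATIVE = {"hate", "awful", "worse"}


def toy_model(text: str) -> str:
    """Stand-in model: classify by keyword lookup on lowercased words."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    if words & NEGATIVE:
        return "negative"
    if words & POSITIVE:
        return "positive"
    return "neutral"


def swap_typo(text: str) -> str:
    """Deterministic typo: swap the first two characters of words longer than 4."""
    return " ".join(
        w[1] + w[0] + w[2:] if len(w) > 4 else w for w in text.split()
    )


def accuracy(model: Callable[[str], str], data: List[Tuple[str, str]]) -> float:
    """Fraction of instances for which the model predicts the gold label."""
    correct = sum(model(text) == label for text, label in data)
    return correct / len(data)


# Hypothetical labelled test split.
test_split = [
    ("I love this medicine", "positive"),
    ("Hate this medicine so much!", "negative"),
    ("It made my headaches worse", "negative"),
    ("Took it twice a day", "neutral"),
]

# Examine: performance on the unmodified data.
baseline = accuracy(toy_model, test_split)
# Expose: performance after perturbing every input with typos.
perturbed = accuracy(toy_model, [(swap_typo(t), y) for t, y in test_split])

print(f"accuracy before typos: {baseline:.2f}, after typos: {perturbed:.2f}")
```

In this toy setup the accuracy drops from 1.00 to 0.75, because the typo turns "worse" into "owrse", which the keyword model no longer recognises. Comparing a metric before and after a perturbation in this way is the general idea behind a robustness test.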

A number of experiments in the explabox can also be used to provide transparency and explanations to stakeholders, such as end-users or clients.

Note

The explabox currently supports only natural language text as a modality. In the future, we intend to extend support to other modalities.

Quick tour

The explabox is distributed on PyPI. To use the package with Python, install it (pip install explabox), import your data and model, and wrap them in the Explabox:

>>> from explabox import import_data, import_model

>>> data = import_data('./drugsCom.zip', data_cols='review', label_cols='rating')

>>> model = import_model('model.onnx', label_map={0: 'negative', 1: 'neutral', 2: 'positive'})

>>> from explabox import Explabox

>>> box = Explabox(data=data,
...                model=model,
...                splits={'train': 'drugsComTrain.tsv', 'test': 'drugsComTest.tsv'})

Then .explore, .examine, .expose and .explain your model:

>>> # Explore the descriptive statistics for each split

>>> box.explore()

>>> # Show wrongly classified instances

>>> box.examine.wrongly_classified()

>>> # Compare the performance on the test split before and after adding typos to the text

>>> box.expose.compare_metrics(split='test', perturbation='add_typos')

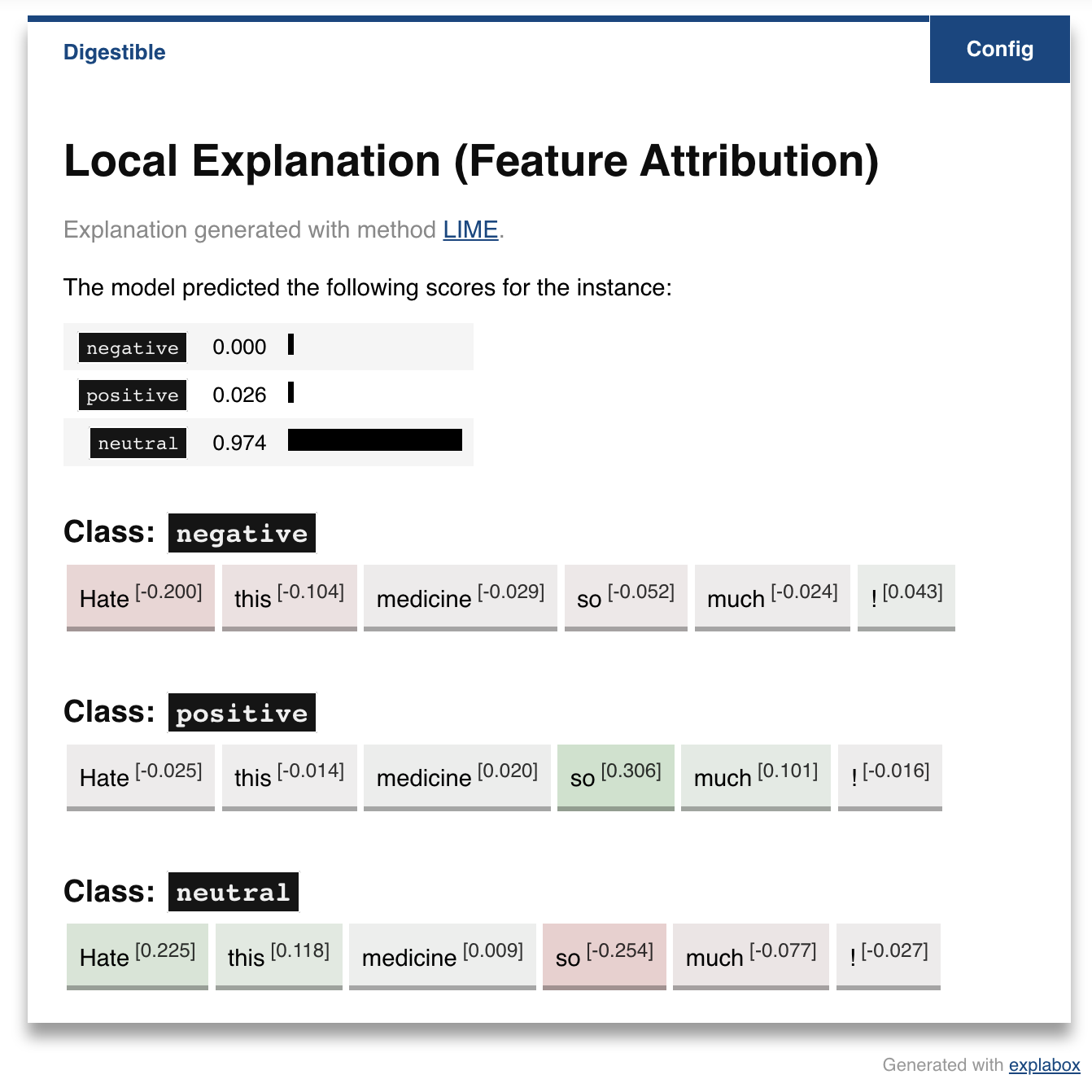

>>> # Get a local explanation (uses LIME by default)

>>> box.explain.explain_prediction('Hate this medicine so much!')
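To give an intuition for what a local explanation contains, the sketch below attributes a prediction score to individual words by removing one word at a time and recording how much the score changes. This is a simple leave-one-word-out (occlusion) scheme, not LIME, which instead fits a local surrogate model on many perturbed samples. The names `WEIGHTS`, `toy_score` and `occlusion_explanation` are hypothetical and not part of the explabox API.

```python
from typing import List, Tuple

# Hypothetical per-word weights for a toy linear sentiment scorer.
WEIGHTS = {"hate": -2.0, "love": 2.0, "much": -0.5}


def toy_score(tokens: List[str]) -> float:
    """Stand-in model: sum the weights of the known words (negative = negative sentiment)."""
    return sum(WEIGHTS.get(t.strip(".,!?").lower(), 0.0) for t in tokens)


def occlusion_explanation(text: str) -> List[Tuple[str, float]]:
    """Score each token by how much the model output changes when it is removed."""
    tokens = text.split()
    full = toy_score(tokens)
    contributions = []
    for i, token in enumerate(tokens):
        without = toy_score(tokens[:i] + tokens[i + 1:])
        contributions.append((token, full - without))
    # Most influential tokens first.
    return sorted(contributions, key=lambda pair: abs(pair[1]), reverse=True)


explanation = occlusion_explanation("Hate this medicine so much!")
for token, contribution in explanation:
    print(f"{token:>10} {contribution:+.1f}")
```

For this toy scorer, "Hate" receives the largest (negative) contribution, followed by "much!", while the remaining words contribute nothing. A real local explanation works with the actual model's predictions, but the output has the same shape: a ranking of input features by their influence on one prediction.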

Using explabox

- Installation

Installation guide, covering installation via pip or directly from the git repository.

- Example Usage

An extended usage example, showcasing how you can explore, examine, expose and explain your AI model.

- Overview

Overview of the general idea behind the explabox and its package structure.

- Explabox API reference

A reference to all classes and functions included in the explabox.

Development

- Explabox @ GIT

The git repository includes the open-source code and the most recent development version.

- Changelog

Changes for each version are recorded in the changelog.

- Contributing

A guide to making your own contributions to the open-source explabox package.

Citation

…